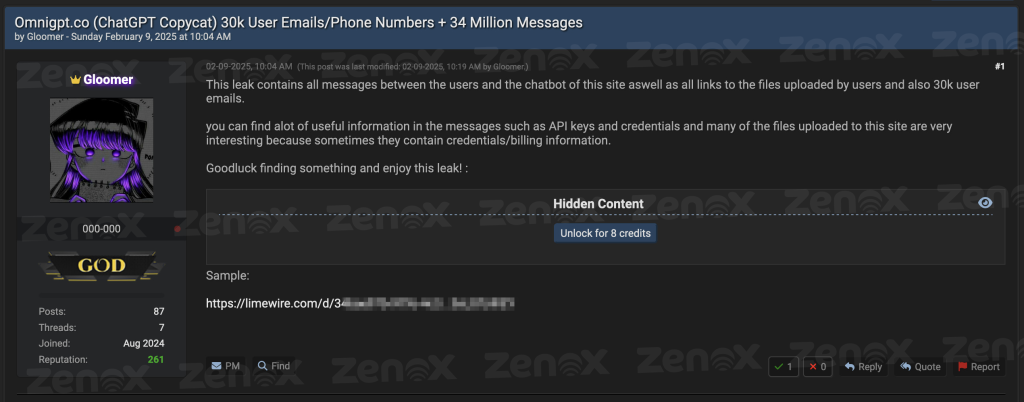

Last Monday (February 9), a user on BreachForums identified as “Gloomer” claimed to have compromised OmniGPT, a widely used Artificial Intelligence (AI) aggregator that provides access to various models, including ChatGPT-4, Claude 3.5, Gemini, and Midjourney.

The malicious actor claims to have obtained approximately 30,000 email addresses, user phone numbers, and over 34 million lines of conversations, as well as all links to files uploaded by users on the platform. A sample of the leaked data set was made available in the same forum post.

The full leaked material is publicly available on BreachForums and can be accessed for 8 credits — an internal forum currency used by participants to acquire leaked materials and other restricted content.

Leak Structure

The ZenoX Threat Intelligence team conducted a thorough investigation of the leaked material. The initial package consisted of a 534 MB ZIP file which, once unzipped, revealed approximately 1.8 GB of data distributed across four distinct files. The file “Files.txt” contained all URLs of the files users had uploaded to the platform, potentially exposing confidential documents, proprietary code, and other sensitive materials. The file “messages.txt,” the largest in the set, contained more than 34 million lines of user conversations with the various AI models, possibly including sensitive information.

The remaining two files contained personal user information, including email addresses, unique identifiers, and phone numbers, creating a complete catalog that could be used for targeted attacks or social engineering campaigns.

Technical Analysis

To understand the extent and implications of the leak, the analysis of the leaked material was divided into two parts. A Golang script was developed to incorporate both approaches, each with its own limitations and technical challenges.

1. Credential Detection

The first stage of the analysis focused on identifying potential credentials and access tokens. A list of 87 regular expression (regex) patterns was used, covering AWS keys, GitHub access tokens, Stripe credentials, and others commonly used in software development. The analysis yielded numerous credentials, notably:

- 206 Hugging Face tokens, potentially allowing access to proprietary machine learning models and training data. The high incidence suggests significant use of the platform for AI development.

- 369 JWT tokens, posing a high risk due to the nature of the information they may contain and their potential use in authenticating various systems.

- In cloud environments, 38 AWS Access Keys and 46 Google Cloud Platform service accounts were identified. These credentials are particularly concerning given the broad access they can provide to critical infrastructure resources.

- 28 Stripe keys (14 public, 14 secret) were found, indicating exposure of payment and financial processing systems.

| Credential Type | Quantity |

| JWT token | 369 |

| Hugging Face Access Token | 206 |

| Google (GCP) Service-account | 46 |

| AWS Access Key ID | 38 |

| Stripe Publishable Key | 25 |

| Discord client secret | 15 |

| AWS Secret Access Key | 14 |

| Shopify token | 14 |

| Stripe Secret Key | 14 |

| Asymmetric Private Key | 13 |

| GitLab Personal Access Token | 11 |

| GitHub Personal Access Token | 3 |

| GitHub Fine-grained PAT | 2 |

| SendGrid API token | 2 |

| Slack Webhook | 1 |

| Adobe Client ID | 1 |

2. Sector Analysis

The second stage focused on a sector-based analysis of the leak, using a list of the 1,000 largest Brazilian companies to gauge the impact on different market segments. After a simple string search, 232,000 references to organizations in that ranking were found. This approach was chosen for its simplicity and operational efficiency, avoiding the use of more complex techniques such as Named Entity Recognition (NER) and advanced Natural Language Processing (NLP). While those techniques could provide greater precision, the decision not to use them was based on a strategy of agile initial analysis.

For instance, NER could differentiate a company name from a common adjective or identify indirect mentions and contextual references to organizations. However, this analysis opted for a more direct method, with subsequent adjustments and refinements planned for later stages.

To reduce false positives and refine results, a semantic and contextual filtering technique was applied based on the following criteria:

- Generic or Common Names: Companies whose names are widely used in everyday vocabulary or words frequently appearing outside the corporate context.

- Short or Ambiguous Acronyms: Companies identified by two- or three-letter acronyms that could be confused with other technical abbreviations or common expressions.

- Polysemic Words: Companies with names that have multiple meanings in different contexts, often used to designate experience levels or other general terms.

After this cleanup, the number of mentions dropped from 232,000 to 16,000, representing only those with a high probability of being legitimate corporate references.

The sector analysis revealed interesting patterns regarding corporate use of AI tools. The insurance sector led with 2,139 mentions, followed by pharmaceutical and beauty with 1,886 references. The transport and logistics segment ranked third with 1,755 mentions, followed by food and beverage with 1,549 citations. The financial sector, combining banks and diverse financial services, totaled about 2,938 mentions, demonstrating a strong dependence on these technologies.

| Sector | Total Mentions |

| Insurance | 2139 |

| Pharmaceutical and Beauty | 1886 |

| Transport, Logistics, and Logistic Services | 1755 |

| Food and Beverages | 1549 |

| Banks | 1476 |

| Financial Services | 1462 |

| Education | 1021 |

| Agribusiness | 837 |

| Wholesale and Retail | 657 |

| Petroleum and Chemical | 657 |

| Capital Goods and Electronics | 604 |

| Health and Healthcare Services | 462 |

| Cooperatives | 438 |

| Steel, Mining, and Metallurgy | 389 |

| Fashion and Apparel | 317 |

| Investments and Media | 285 |

| Technology and Telecommunications | 254 |

| Energy | 169 |

| Real Estate and Construction | 140 |

| Paper and Pulp | 42 |

| Health Insurance Operators | 33 |

| Sanitation and Environment | 1 |

This sector distribution suggests that OmniGPT has been extensively used for various corporate purposes, ranging from software development and process automation to data analysis and customer support. The significant presence of regulated sectors, such as insurance and financial services, raises additional concerns about compliance and protection of sensitive data.

Conclusion

These findings reinforce how the use of AI solutions spans multiple business segments, while also exposing weaknesses in how corporate data is handled. More and more, development teams and innovation areas are leveraging the productivity offered by advanced models, but it is essential to adopt good security practices in return. It is not about avoiding AI use but rather creating processes that mitigate the risk of leaks — for example, masking genuine tokens and keys before submitting code snippets to any model or assistance platform.

The OmniGPT incident serves as a crucial warning about the inherent risks of adopting AI tools without adequate security controls. While these technologies offer undeniable benefits in terms of productivity and innovation, establishing a balance between agility and data protection is vital.

The sheer volume and nature of the exposed information demonstrate that corporate use of AI must be accompanied by a robust security strategy. This includes not only technical protection measures but also a cultural shift toward safer information-sharing practices.

As AI adoption continues to grow, incidents like this will likely become more common. The ability of organizations to protect their data while reaping the benefits of these technologies will be a key competitive differentiator in the coming years. The OmniGPT case should not be viewed as an isolated incident but rather as a catalyst for a broader discussion on security and governance in corporate use of artificial intelligence.